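ML - MIC: Understanding how correlation measures differ, using sine and cosine functions with and without noise (multi-panel plots of the Pearson coefficient and the maximal information coefficient, MIC)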


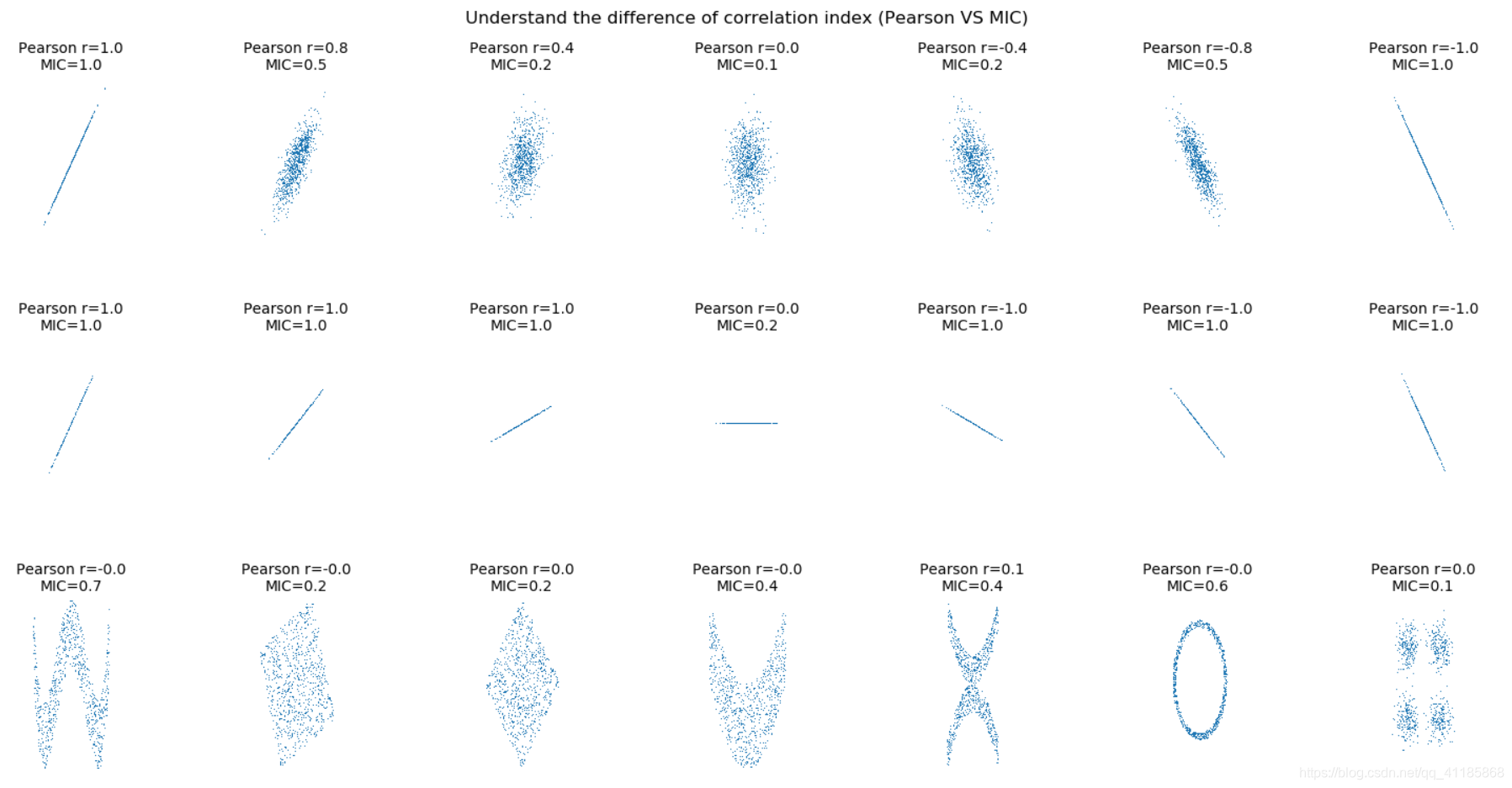


Output

Implementation code

    # ML - MIC: understanding how correlation measures differ
    # (multi-panel plots of the Pearson coefficient and the maximal information coefficient, MIC)

    import numpy as np
    import matplotlib.pyplot as plt
    from minepy import MINE


    def mysubplot(x, y, numRows, numCols, plotNum,
                  xlim=(-4, 4), ylim=(-4, 4)):
        """Scatter-plot x against y in one panel, titled with Pearson r and MIC."""
        # Pearson correlation coefficient, rounded to one decimal place
        r = np.around(np.corrcoef(x, y)[0, 1], 1)
        # Maximal information coefficient computed with minepy
        mine = MINE(alpha=0.6, c=15)
        mine.compute_score(x, y)
        mic = np.around(mine.mic(), 1)
        ax = plt.subplot(numRows, numCols, plotNum,
                         xlim=xlim, ylim=ylim)
        ax.set_title('Pearson r=%.1f\nMIC=%.1f' % (r, mic), fontsize=10)
        # Hide the frame, axes and ticks so only the point cloud is visible
        ax.set_frame_on(False)
        ax.axes.get_xaxis().set_visible(False)
        ax.axes.get_yaxis().set_visible(False)
        ax.plot(x, y, ',')
        ax.set_xticks([])
        ax.set_yticks([])
        return ax


    def rotation(xy, t):
        """Rotate an (n, 2) array of points by the angle t (radians)."""
        return np.dot(xy, [[np.cos(t), -np.sin(t)],
                           [np.sin(t), np.cos(t)]])


    def mvnormal(n=1000):
        """Row 1: bivariate normal samples with correlations from 1.0 down to -1.0."""
        cors = [1.0, 0.8, 0.4, 0.0, -0.4, -0.8, -1.0]
        for i, cor in enumerate(cors):
            cov = [[1, cor], [cor, 1]]
            xy = np.random.multivariate_normal([0, 0], cov, n)
            mysubplot(xy[:, 0], xy[:, 1], 3, 7, i+1)


    def rotnormal(n=1000):
        """Row 2: a perfectly correlated sample rotated through seven angles."""
        ts = [0, np.pi/12, np.pi/6, np.pi/4, np.pi/2-np.pi/6,
              np.pi/2-np.pi/12, np.pi/2]
        cov = [[1, 1], [1, 1]]
        xy = np.random.multivariate_normal([0, 0], cov, n)
        for i, t in enumerate(ts):
            xy_r = rotation(xy, t)
            mysubplot(xy_r[:, 0], xy_r[:, 1], 3, 7, i+8)


    def others(n=1000):
        """Row 3: nonlinear and non-functional relationships."""
        # Noisy quartic curve
        x = np.random.uniform(-1, 1, n)
        y = 4*(x**2-0.5)**2 + np.random.uniform(-1, 1, n)/3
        mysubplot(x, y, 3, 7, 15, (-1, 1), (-1/3, 1+1/3))

        # Uniform square rotated by pi/8
        y = np.random.uniform(-1, 1, n)
        xy = np.concatenate((x.reshape(-1, 1), y.reshape(-1, 1)), axis=1)
        xy = rotation(xy, -np.pi/8)
        lim = np.sqrt(2+np.sqrt(2)) / np.sqrt(2)
        mysubplot(xy[:, 0], xy[:, 1], 3, 7, 16, (-lim, lim), (-lim, lim))

        # The same square rotated by a further pi/8 (pi/4 in total)
        xy = rotation(xy, -np.pi/8)
        lim = np.sqrt(2)
        mysubplot(xy[:, 0], xy[:, 1], 3, 7, 17, (-lim, lim), (-lim, lim))

        # Noisy parabola
        y = 2*x**2 + np.random.uniform(-1, 1, n)
        mysubplot(x, y, 3, 7, 18, (-1, 1), (-1, 3))

        # Parabola with a random sign: two mirrored branches.
        # np.random.randint(0, 2, ...) replaces the deprecated
        # np.random.random_integers(0, 1, ...); both draw from {0, 1}.
        y = (x**2 + np.random.uniform(0, 0.5, n)) * \
            np.array([-1, 1])[np.random.randint(0, 2, size=n)]
        mysubplot(x, y, 3, 7, 19, (-1.5, 1.5), (-1.5, 1.5))

        # Noisy circle built from cosine and sine of the same variable
        y = np.cos(x * np.pi) + np.random.uniform(0, 1/8, n)
        x = np.sin(x * np.pi) + np.random.uniform(0, 1/8, n)
        mysubplot(x, y, 3, 7, 20, (-1.5, 1.5), (-1.5, 1.5))

        # Four independent Gaussian clusters
        xy1 = np.random.multivariate_normal([3, 3], [[1, 0], [0, 1]], int(n/4))
        xy2 = np.random.multivariate_normal([-3, 3], [[1, 0], [0, 1]], int(n/4))
        xy3 = np.random.multivariate_normal([-3, -3], [[1, 0], [0, 1]], int(n/4))
        xy4 = np.random.multivariate_normal([3, -3], [[1, 0], [0, 1]], int(n/4))
        xy = np.concatenate((xy1, xy2, xy3, xy4), axis=0)
        mysubplot(xy[:, 0], xy[:, 1], 3, 7, 21, (-7, 7), (-7, 7))


    plt.figure(facecolor='white')
    mvnormal(n=800)
    rotnormal(n=200)
    others(n=800)
    plt.tight_layout()

    plt.suptitle('Understand the difference of correlation index (Pearson VS MIC)')
    plt.show()
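To see why the two numbers in each panel title can disagree, the following sketch may help. It uses only the Python standard library, so it runs without minepy or NumPy. It compares the Pearson coefficient with a crude dependence score on a straight line, on a cosine with and without noise, and on independent data. The helper names `pearson_r` and `binned_mi_score` are hypothetical and do not appear in the original code. In particular, `binned_mi_score` is only a fixed-grid mutual information estimate divided by `log(bins)`. It is a rough stand-in for the idea behind MIC, not the algorithm that minepy implements. The noise range of ±0.2 and the 8×8 grid are arbitrary choices made for illustration.

```python
import math
import random


def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def binned_mi_score(xs, ys, bins=8):
    """Hypothetical helper: mutual information on an equal-width grid,
    divided by log(bins) so the score lies in [0, 1].
    This is NOT minepy's MIC, only a simplified illustration.
    Assumes neither xs nor ys is constant."""
    def bin_index(v, lo, hi):
        # Map v in [lo, hi] to a bin 0..bins-1 (the maximum lands in the last bin).
        return min(int((v - lo) / (hi - lo) * bins), bins - 1)

    x_lo, x_hi = min(xs), max(xs)
    y_lo, y_hi = min(ys), max(ys)
    n = len(xs)

    # Count points per grid cell, then per row and per column.
    joint = {}
    for x, y in zip(xs, ys):
        key = (bin_index(x, x_lo, x_hi), bin_index(y, y_lo, y_hi))
        joint[key] = joint.get(key, 0) + 1
    px = [0] * bins
    py = [0] * bins
    for (i, j), c in joint.items():
        px[i] += c
        py[j] += c

    # Sum p(i, j) * log(p(i, j) / (p(i) * p(j))) over the non-empty cells.
    mi = 0.0
    for (i, j), c in joint.items():
        mi += (c / n) * math.log(c * n / (px[i] * py[j]))
    return mi / math.log(bins)


n = 1000
rng = random.Random(0)                          # fixed seed for repeatability
x = [-1 + 2 * i / (n - 1) for i in range(n)]    # symmetric grid on [-1, 1]
y_line = [2 * v + 1 for v in x]                 # exact linear relationship
y_cos = [math.cos(math.pi * v) for v in x]      # exact but nonlinear relationship
y_cos_noisy = [v + rng.uniform(-0.2, 0.2) for v in y_cos]
y_indep = [rng.uniform(-1, 1) for _ in x]       # no relationship with x

for name, ys in [("line", y_line), ("cosine", y_cos),
                 ("noisy cosine", y_cos_noisy), ("independent", y_indep)]:
    print("%-13s Pearson r=%+.2f  binned MI score=%.2f"
          % (name, pearson_r(x, ys), binned_mi_score(x, ys)))
```

On the cosine, `y` is fully determined by `x`, yet the Pearson coefficient is essentially zero because the curve is symmetric about `x = 0`, so its positive and negative slopes cancel. The grid-based score stays well above the value it gives for independent data. The nonlinear panels in the figure show the same contrast between Pearson r and MIC.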
