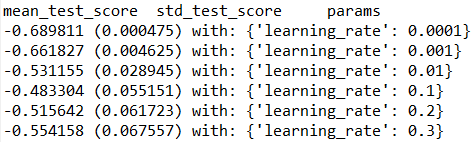

ML之Xgboost:利用Xgboost模型(7f-CrVa+网格搜索调参)对数据集(比马印第安人糖尿病)进行二分类预测

ML之Xgboost:利用Xgboost模型(7f-CrVa+网格搜索调参)对数据集(比马印第安人糖尿病)进行二分类预测

目录

输出结果

![]()

设计思路

核心代码

- grid_search = GridSearchCV(model, param_grid, scoring="neg_log_loss", n_jobs=-1, cv=kfold)

- grid_result = grid_search.fit(X, Y)

-

-

- param_grid = dict(learning_rate=learning_rate)

- kfold = StratifiedKFold(n_splits=10, shuffle=True, random_state=7)

- class GridSearchCV(-title class_ inherited__">BaseSearchCV):

- """Exhaustive search over specified parameter values for an estimator.

-

- Important members are fit, predict.

-

- GridSearchCV implements a "fit" and a "score" method.

- It also implements "predict", "predict_proba", "decision_function",

- "transform" and "inverse_transform" if they are implemented in the

- estimator used.

-

- The parameters of the estimator used to apply these methods are

- optimized

- by cross-validated grid-search over a parameter grid.

-

- Read more in the :ref:`User Guide <grid_search>`.

-

- Parameters

- ----------

- estimator : estimator object.

- This is assumed to implement the scikit-learn estimator interface.

- Either estimator needs to provide a ``score`` function,

- or ``scoring`` must be passed.

-

- param_grid : dict or list of dictionaries

- Dictionary with parameters names (string) as keys and lists of

- parameter settings to try as values, or a list of such

- dictionaries, in which case the grids spanned by each dictionary

- in the list are explored. This enables searching over any sequence

- of parameter settings.

-

- scoring : string, callable, list/tuple, dict or None, default: None

- A single string (see :ref:`scoring_parameter`) or a callable

- (see :ref:`scoring`) to evaluate the predictions on the test set.

-

- For evaluating multiple metrics, either give a list of (unique) strings

- or a dict with names as keys and callables as values.

-

- NOTE that when using custom scorers, each scorer should return a

- single

- value. Metric functions returning a list/array of values can be wrapped

- into multiple scorers that return one value each.

-

- See :ref:`multimetric_grid_search` for an example.

-

- If None, the estimator's default scorer (if available) is used.

-

- fit_params : dict, optional

- Parameters to pass to the fit method.

-

- .. deprecated:: 0.19

- ``fit_params`` as a constructor argument was deprecated in version

- 0.19 and will be removed in version 0.21. Pass fit parameters to

- the ``fit`` method instead.

-

- n_jobs : int, default=1

- Number of jobs to run in parallel.

-

- pre_dispatch : int, or string, optional

- Controls the number of jobs that get dispatched during parallel

- execution. Reducing this number can be useful to avoid an

- explosion of memory consumption when more jobs get dispatched

- than CPUs can process. This parameter can be:

-

- - None, in which case all the jobs are immediately

- created and spawned. Use this for lightweight and

- fast-running jobs, to avoid delays due to on-demand

- spawning of the jobs

-

- - An int, giving the exact number of total jobs that are

- spawned

-

- - A string, giving an expression as a function of n_jobs,

- as in '2*n_jobs'

-

- iid : boolean, default=True

- If True, the data is assumed to be identically distributed across

- the folds, and the loss minimized is the total loss per sample,

- and not the mean loss across the folds.

-

- cv : int, cross-validation generator or an iterable, optional

- Determines the cross-validation splitting strategy.

- Possible inputs for cv are:

- - None, to use the default 3-fold cross validation,

- - integer, to specify the number of folds in a `(Stratified)KFold`,

- - An object to be used as a cross-validation generator.

- - An iterable yielding train, test splits.

-

- For integer/None inputs, if the estimator is a classifier and ``y`` is

- either binary or multiclass, :class:`StratifiedKFold` is used. In all

- other cases, :class:`KFold` is used.

-

- Refer :ref:`User Guide <cross_validation>` for the various

- cross-validation strategies that can be used here.

-

- refit : boolean, or string, default=True

- Refit an estimator using the best found parameters on the whole

- dataset.

-

- For multiple metric evaluation, this needs to be a string denoting the

- scorer is used to find the best parameters for refitting the estimator

- at the end.

-

- The refitted estimator is made available at the ``best_estimator_``

- attribute and permits using ``predict`` directly on this

- ``GridSearchCV`` instance.

-

- Also for multiple metric evaluation, the attributes ``best_index_``,

- ``best_score_`` and ``best_parameters_`` will only be available if

- ``refit`` is set and all of them will be determined w.r.t this specific

- scorer.

-

- See ``scoring`` parameter to know more about multiple metric

- evaluation.

-

- verbose : integer

- Controls the verbosity: the higher, the more messages.

-

- error_score : 'raise' (default) or numeric

- Value to assign to the score if an error occurs in estimator fitting.

- If set to 'raise', the error is raised. If a numeric value is given,

- FitFailedWarning is raised. This parameter does not affect the refit

- step, which will always raise the error.

-

- return_train_score : boolean, optional

- If ``False``, the ``cv_results_`` attribute will not include training

- scores.

-

- Current default is ``'warn'``, which behaves as ``True`` in addition

- to raising a warning when a training score is looked up.

- That default will be changed to ``False`` in 0.21.

- Computing training scores is used to get insights on how different

- parameter settings impact the overfitting/underfitting trade-off.

- However computing the scores on the training set can be

- computationally

- expensive and is not strictly required to select the parameters that

- yield the best generalization performance.

-

-

- Examples

- --------

- >>> from sklearn import svm, datasets

- >>> from sklearn.model_selection import GridSearchCV

- >>> iris = datasets.load_iris()

- >>> parameters = {'kernel':('linear', 'rbf'), 'C':[1, 10]}

- >>> svc = svm.SVC()

- >>> clf = GridSearchCV(svc, parameters)

- >>> clf.fit(iris.data, iris.target)

- ... doctest: +NORMALIZE_WHITESPACE +ELLIPSIS

- GridSearchCV(cv=None, error_score=...,

- estimator=SVC(C=1.0, cache_size=..., class_weight=..., coef0=...,

- decision_function_shape='ovr', degree=..., gamma=...,

- kernel='rbf', max_iter=-1, probability=False,

- random_state=None, shrinking=True, tol=...,

- verbose=False),

- fit_params=None, iid=..., n_jobs=1,

- param_grid=..., pre_dispatch=..., refit=..., return_train_score=...,

- scoring=..., verbose=...)

- >>> sorted(clf.cv_results_.keys())

- ... doctest: +NORMALIZE_WHITESPACE +ELLIPSIS

- ['mean_fit_time', 'mean_score_time', 'mean_test_score',...

- 'mean_train_score', 'param_C', 'param_kernel', 'params',...

- 'rank_test_score', 'split0_test_score',...

- 'split0_train_score', 'split1_test_score', 'split1_train_score',...

- 'split2_test_score', 'split2_train_score',...

- 'std_fit_time', 'std_score_time', 'std_test_score', 'std_train_score'...]

-

- Attributes

- ----------

- cv_results_ : dict of numpy (masked) ndarrays

- A dict with keys as column headers and values as columns, that can be

- imported into a pandas ``DataFrame``.

-

- For instance the below given table

-

- +------------+-----------+------------+-----------------+---+---------+

- |param_kernel|param_gamma|param_degree|split0_test_score|...

- |rank_t...|

-

- +============+===========+============+========

- =========+===+=========+

- | 'poly' | -- | 2 | 0.8 |...| 2 |

- +------------+-----------+------------+-----------------+---+---------+

- | 'poly' | -- | 3 | 0.7 |...| 4 |

- +------------+-----------+------------+-----------------+---+---------+

- | 'rbf' | 0.1 | -- | 0.8 |...| 3 |

- +------------+-----------+------------+-----------------+---+---------+

- | 'rbf' | 0.2 | -- | 0.9 |...| 1 |

- +------------+-----------+------------+-----------------+---+---------+

-

- will be represented by a ``cv_results_`` dict of::

-

- {

- 'param_kernel': masked_array(data = ['poly', 'poly', 'rbf', 'rbf'],

- mask = [False False False False]...)

- 'param_gamma': masked_array(data = [-- -- 0.1 0.2],

- mask = [ True True False False]...),

- 'param_degree': masked_array(data = [2.0 3.0 -- --],

- mask = [False False True True]...),

- 'split0_test_score' : [0.8, 0.7, 0.8, 0.9],

- 'split1_test_score' : [0.82, 0.5, 0.7, 0.78],

- 'mean_test_score' : [0.81, 0.60, 0.75, 0.82],

- 'std_test_score' : [0.02, 0.01, 0.03, 0.03],

- 'rank_test_score' : [2, 4, 3, 1],

- 'split0_train_score' : [0.8, 0.9, 0.7],

- 'split1_train_score' : [0.82, 0.5, 0.7],

- 'mean_train_score' : [0.81, 0.7, 0.7],

- 'std_train_score' : [0.03, 0.03, 0.04],

- 'mean_fit_time' : [0.73, 0.63, 0.43, 0.49],

- 'std_fit_time' : [0.01, 0.02, 0.01, 0.01],

- 'mean_score_time' : [0.007, 0.06, 0.04, 0.04],

- 'std_score_time' : [0.001, 0.002, 0.003, 0.005],

- 'params' : [{'kernel': 'poly', 'degree': 2}, ...],

- }

-

- NOTE

-

- The key ``'params'`` is used to store a list of parameter

- settings dicts for all the parameter candidates.

-

- The ``mean_fit_time``, ``std_fit_time``, ``mean_score_time`` and

- ``std_score_time`` are all in seconds.

-

- For multi-metric evaluation, the scores for all the scorers are

- available in the ``cv_results_`` dict at the keys ending with that

- scorer's name (``'_<scorer_name>'``) instead of ``'_score'`` shown

- above. ('split0_test_precision', 'mean_train_precision' etc.)

-

- best_estimator_ : estimator or dict

- Estimator that was chosen by the search, i.e. estimator

- which gave highest score (or smallest loss if specified)

- on the left out data. Not available if ``refit=False``.

-

- See ``refit`` parameter for more information on allowed values.

-

- best_score_ : float

- Mean cross-validated score of the best_estimator

-

- For multi-metric evaluation, this is present only if ``refit`` is

- specified.

-

- best_params_ : dict

- Parameter setting that gave the best results on the hold out data.

-

- For multi-metric evaluation, this is present only if ``refit`` is

- specified.

-

网站声明:如果转载,请联系本站管理员。否则一切后果自行承担。

赞同 0

评论 0 条

- 上周热门

- 如何使用 StarRocks 管理和优化数据湖中的数据? 2951

- 【软件正版化】软件正版化工作要点 2872

- 统信UOS试玩黑神话:悟空 2833

- 信刻光盘安全隔离与信息交换系统 2728

- 镜舟科技与中启乘数科技达成战略合作,共筑数据服务新生态 1261

- grub引导程序无法找到指定设备和分区 1226

- 华为全联接大会2024丨软通动力分论坛精彩议程抢先看! 165

- 2024海洋能源产业融合发展论坛暨博览会同期活动-海洋能源与数字化智能化论坛成功举办 163

- 点击报名 | 京东2025校招进校行程预告 163

- 华为纯血鸿蒙正式版9月底见!但Mate 70的内情还得接着挖... 159

- 本周热议

- 我的信创开放社区兼职赚钱历程 40

- 今天你签到了吗? 27

- 如何玩转信创开放社区—从小白进阶到专家 15

- 信创开放社区邀请他人注册的具体步骤如下 15

- 方德桌面操作系统 14

- 用抖音玩法闯信创开放社区——用平台宣传企业产品服务 13

- 我有15积分有什么用? 13

- 如何让你先人一步获得悬赏问题信息?(创作者必看) 12

- 2024中国信创产业发展大会暨中国信息科技创新与应用博览会 9

- 中央国家机关政府采购中心:应当将CPU、操作系统符合安全可靠测评要求纳入采购需求 8

热门标签更多